Use distributed computing in the cloud to increase your analysis speed and get your results FAST

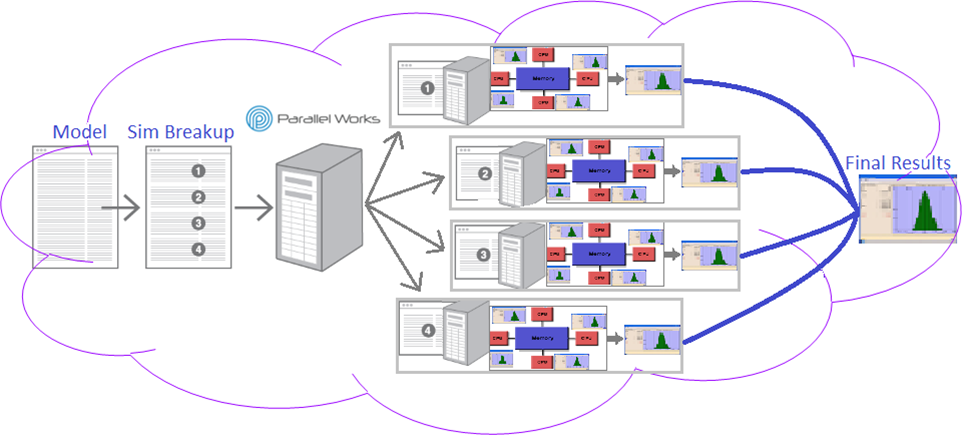

Distributed computing is a model in which an analysis is broken down into multiple threads, each run on a separate compute node in the cloud. Once the threads complete, their results are combined into a single, comprehensive output. Because the threads are processed in parallel, the analysis runs faster and delivers results sooner.
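A minimal Python sketch of this split, run, and combine model follows. It assumes the analysis is a set of independent Monte Carlo simulations, as in a tolerance stack-up. The stack-up in `simulate_chunk` and its dimensions are hypothetical. A local `ThreadPoolExecutor` stands in for the cloud nodes so the sketch runs anywhere. It does not show how Parallel Works or 3DCS actually schedule work.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def simulate_chunk(seed: int, n_sims: int) -> list[float]:
    """Run n_sims Monte Carlo samples of a hypothetical three-part stack-up."""
    rng = random.Random(seed)  # independent, reproducible stream for this chunk
    gaps = []
    for _ in range(n_sims):
        # Hypothetical: three parts, nominal 10.0 mm each, standard deviation 0.05 mm
        gaps.append(sum(rng.gauss(10.0, 0.05) for _ in range(3)))
    return gaps


def run_distributed(total_sims: int, n_workers: int) -> list[float]:
    # 1. Split: divide the simulations as evenly as possible among the workers
    base, extra = divmod(total_sims, n_workers)
    sizes = [base + (1 if i < extra else 0) for i in range(n_workers)]
    # 2. Run: execute the chunks concurrently. Threads stand in for cloud nodes;
    #    because of Python's GIL they will not speed up CPU-bound work, which
    #    would need separate processes or machines.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        chunks = list(pool.map(simulate_chunk, range(n_workers), sizes))
    # 3. Combine: concatenate the partial results, in chunk order, into one output
    return [gap for chunk in chunks for gap in chunk]


if __name__ == "__main__":
    gaps = run_distributed(total_sims=10_000, n_workers=4)
    print(len(gaps), round(sum(gaps) / len(gaps), 2))
```

Each chunk gets its own seed, and `Executor.map` returns results in submission order. The combined output is therefore reproducible no matter which worker finishes first.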

Parallel Works is an innovative cloud-based platform that empowers users with "personal supercomputers." The platform lets users seamlessly run large-scale jobs in parallel across hundreds to thousands of compute cores, leveraging the scale of the cloud at the click of a button.

Speed: Run analyses in parallel to shorten time to solution

On-demand license: Need to work on your model on your desktop while a job is running? Offload the job to the cloud and keep using your license for modeling.

Unlimited hardware: Scale up in the cloud based on the

Multiply your processing power while freeing up local licenses and machines

Magna Seating needed analysis results quickly to make key decisions. To test Distributed Computing, they ran four models of increasing size and complexity. Here is how to read the results:

Bars: the time each analysis took to complete

Threads: 4 if Shared Memory was used, 1 if it was not

Concurrent: the number of computers used in the cloud

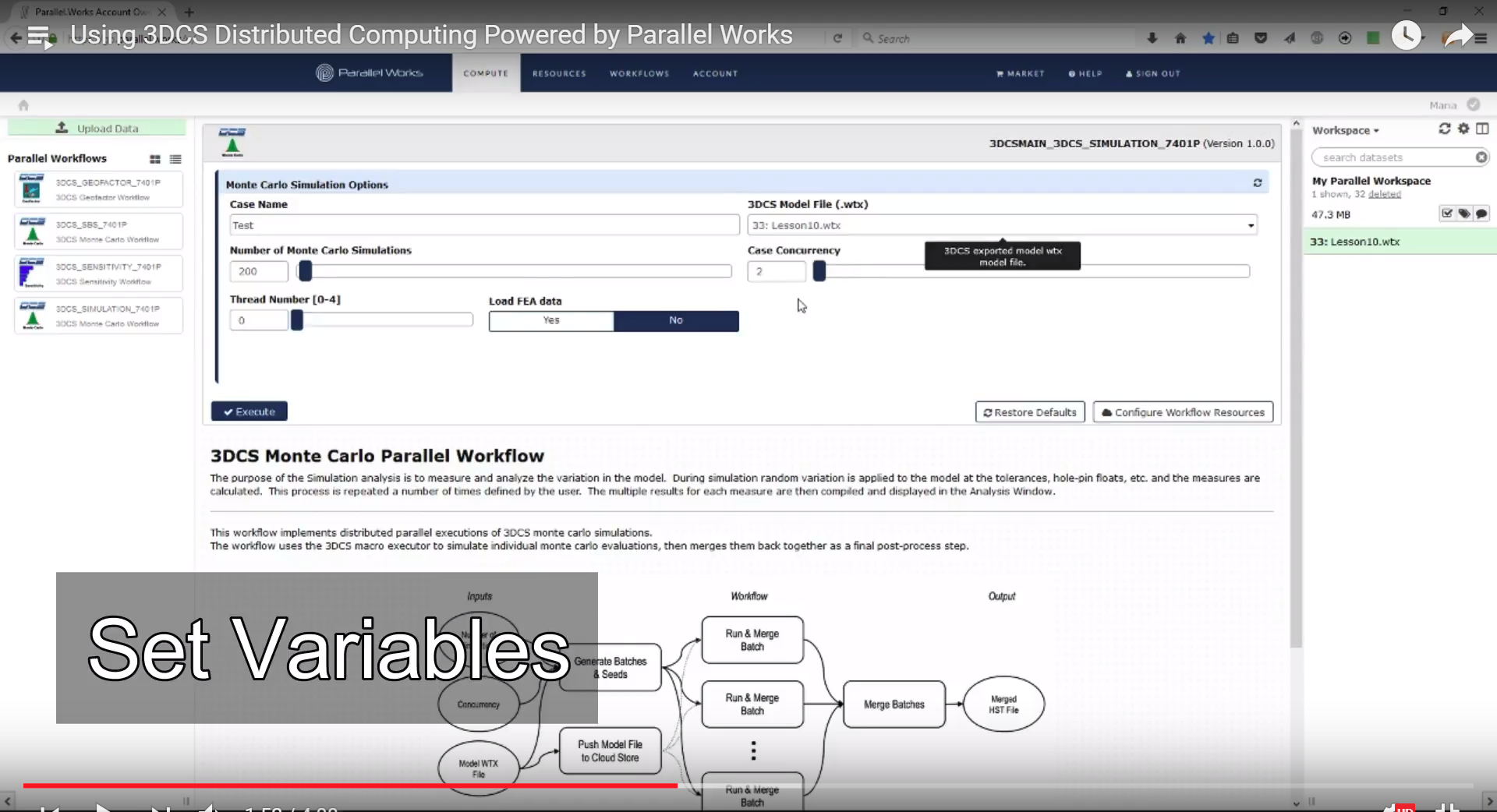


It doesn't matter which version of 3DCS you have.

Use Credits, and purchase additional ones as you need them.

Upload your results back into your model to view them in 3D.

Need more security? Set up your own system within your environment and utilize a group of machines to analyze your models.

Use as many cores as you want to get your results as soon as you need them.

You only need two things to get started with Distributed Computing:

1. A Distributed Computing Powered by Parallel Works account (free setup)

2. Your model's WTX file, which you can export from any version of 3DCS

Then set your concurrency (the number of computers to use) and the number of simulations.

Your output is an HST file, which you can download as a basic report or as a raw file to import into 3DCS to display your results.
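Choosing a concurrency involves a trade-off. More computers shrink the parallel part of the run, but any serial part does not shrink. Examples of serial work include uploading the model, starting nodes, and combining results. The minimal sketch below estimates this effect with Amdahl's law. The 480-minute single-machine runtime and the 1% serial fraction are hypothetical inputs, not figures published by Parallel Works or 3DCS. The `plan_run` function is likewise illustrative.

```python
import math


def plan_run(total_sims: int, concurrency: int,
             serial_minutes: float, serial_fraction: float) -> tuple[int, float]:
    """Return (max simulations per computer, estimated wall-clock minutes).

    serial_fraction is the hypothetical share of the single-machine runtime
    that cannot be parallelized (setup, upload, recombining results).
    """
    if concurrency < 1 or total_sims < 1:
        raise ValueError("concurrency and total_sims must be positive")
    # The busiest computer gets the rounded-up share of the simulations
    sims_per_computer = math.ceil(total_sims / concurrency)
    # Amdahl's law: only the parallel fraction shrinks as computers are added
    est_minutes = serial_minutes * (serial_fraction + (1 - serial_fraction) / concurrency)
    return sims_per_computer, est_minutes


if __name__ == "__main__":
    for c in (1, 2, 4, 8, 16, 32):
        sims, minutes = plan_run(10_000, c, serial_minutes=480, serial_fraction=0.01)
        print(f"concurrency={c:2d}  sims/computer={sims:5d}  est. {minutes:6.1f} min")
```

With these hypothetical numbers, the estimate drops quickly at first and then flattens toward a floor of 480 × 0.01 = 4.8 minutes. Past a certain point, adding computers buys little.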

Distributed Computing Powered by Parallel Works takes advantage of the new Shared Memory feature in the latest versions of 3DCS, giving your analysis a further speed boost.

Standard Distributed Computing

- This process breaks the analysis into multiple strings, runs those strings, and then recombines the results into your final outputs. Each string runs on a separate computer in the cloud, so the combined processing power of all the machines speeds up the analysis.
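The recombination step does not always need every raw sample. Suppose each string returns only its count, mean, and sum of squared deviations. The final mean and standard deviation can then be merged exactly with the standard pairwise parallel-variance update (Chan, Golub, and LeVeque). The sketch below is a minimal illustration of that technique. The source does not say whether 3DCS combines results this way, and the `PartialStats` type and sample values are hypothetical.

```python
import math
import statistics
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class PartialStats:
    """Hypothetical summary returned by one string of the analysis."""
    n: int        # number of samples in this string
    mean: float   # mean of those samples
    m2: float     # sum of squared deviations from that mean

    @classmethod
    def from_samples(cls, xs: list[float]) -> "PartialStats":
        mu = statistics.fmean(xs)
        return cls(len(xs), mu, sum((x - mu) ** 2 for x in xs))


def merge(a: PartialStats, b: PartialStats) -> PartialStats:
    """Combine two summaries exactly with the pairwise parallel-variance update."""
    n = a.n + b.n
    delta = b.mean - a.mean
    mean = a.mean + delta * b.n / n
    m2 = a.m2 + b.m2 + delta ** 2 * a.n * b.n / n
    return PartialStats(n, mean, m2)


def combine(parts: list[PartialStats]) -> tuple[float, float]:
    """Return (mean, sample standard deviation) over all strings; needs >= 2 samples."""
    total = reduce(merge, parts)
    return total.mean, math.sqrt(total.m2 / (total.n - 1))


if __name__ == "__main__":
    # Hypothetical per-string measurements (mm)
    strings = [[29.98, 30.02, 30.01], [29.95, 30.04], [30.00, 29.99, 30.03, 30.02]]
    mean, sd = combine([PartialStats.from_samples(s) for s in strings])
    print(f"mean={mean:.4f} mm, sd={sd:.4f} mm")
```

The merged result matches the mean and sample standard deviation computed from all samples at once, up to floating-point rounding. Each computer therefore only has to send back three numbers.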

Distributed Computing with Shared Memory

- This process uses standard Distributed Computing to split the analysis among different computers in the cloud, then further splits each computer's share among its individual cores to increase the speed even more. For more information on Shared Memory, watch the webinar recording "Increase Your Analysis Speed with Shared and Distributed Computing".
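The two-level split can be sketched as follows, assuming the simulations are divided evenly first across computers and then across each computer's threads. The function names are hypothetical. The sketch only illustrates the partitioning and does not reproduce how 3DCS assigns work to cores.

```python
def split_evenly(total: int, parts: int) -> list[int]:
    """Divide total items into `parts` shares whose sizes differ by at most one."""
    base, extra = divmod(total, parts)
    return [base + (1 if i < extra else 0) for i in range(parts)]


def two_level_split(total_sims: int, computers: int, threads: int) -> list[list[int]]:
    """Level 1: simulations per computer. Level 2: each computer's share per thread."""
    return [split_evenly(share, threads) for share in split_evenly(total_sims, computers)]


if __name__ == "__main__":
    # Hypothetical run: 1,000 simulations, 3 computers, 4 threads per computer
    plan = two_level_split(total_sims=1_000, computers=3, threads=4)
    for i, per_thread in enumerate(plan):
        print(f"computer {i}: {sum(per_thread)} sims -> threads {per_thread}")
```

The total number of simultaneous workers is computers × threads. In this hypothetical case, 3 computers running 4 threads each give 12 workers.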

Harness the power of our flexible system to create custom workflow topologies. This lets users run a diverse range of analyses simultaneously while significantly speeding up complex, lengthy tasks such as those in the FEA Compliant Modeler.

For example, you can run a Design of Experiments for automated benchmarking and create custom visualizations in matplotlib.
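A benchmarking Design of Experiments could be set up as a full-factorial grid over run settings, using factors like those in the Magna Seating chart (threads and concurrency). The sketch below is minimal. The factor levels and the `build_doe` name are hypothetical, and each case would still have to be submitted and timed by whatever workflow you build on the platform.

```python
from itertools import product


def build_doe(factors: dict[str, list]) -> list[dict]:
    """Full-factorial Design of Experiments: every combination of factor levels."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


if __name__ == "__main__":
    # Hypothetical factor levels for a benchmarking sweep
    cases = build_doe({
        "model": ["A", "B"],
        "threads": [1, 4],          # 1 = no Shared Memory, 4 = Shared Memory
        "concurrency": [1, 4, 16],  # computers in the cloud
    })
    print(len(cases))  # 2 * 2 * 3 = 12 cases
    for case in cases[:3]:
        print(case)
```

You could time each case and plot the results in matplotlib to produce a comparison similar to the Magna Seating chart.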

Offload your analysis processing to the cloud and let Distributed Computing handle the hardware and software requirements while you continue your work. Because no licenses or additional software are required, you can start using Distributed Computing right away. Purchase Credits as needed, so you control how much or how little you use.


With Shared Memory and Distributed Computing, a 16-hour analysis was reduced to 10 minutes!
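For scale, the speedup from the two stated times works out as:

$$
\text{speedup} = \frac{16\ \text{h} \times 60\ \text{min/h}}{10\ \text{min}} = \frac{960\ \text{min}}{10\ \text{min}} = 96
$$

In other words, the run finished about 96 times faster, in roughly 1% of the original runtime.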